The eyes of autonomous driving, vehicle sensing cameras

Date: 2024-09-05

The eyes of autonomous driving, the core of safer and more intelligent autonomous driving

In an ever-evolving automotive technology landscape, safety continues to drive innovation. Among the many advancements transforming the way we drive, automotive detection cameras stand out as a pivotal technology that improves vehicle safety and performance and paves the way for fully autonomous driving.

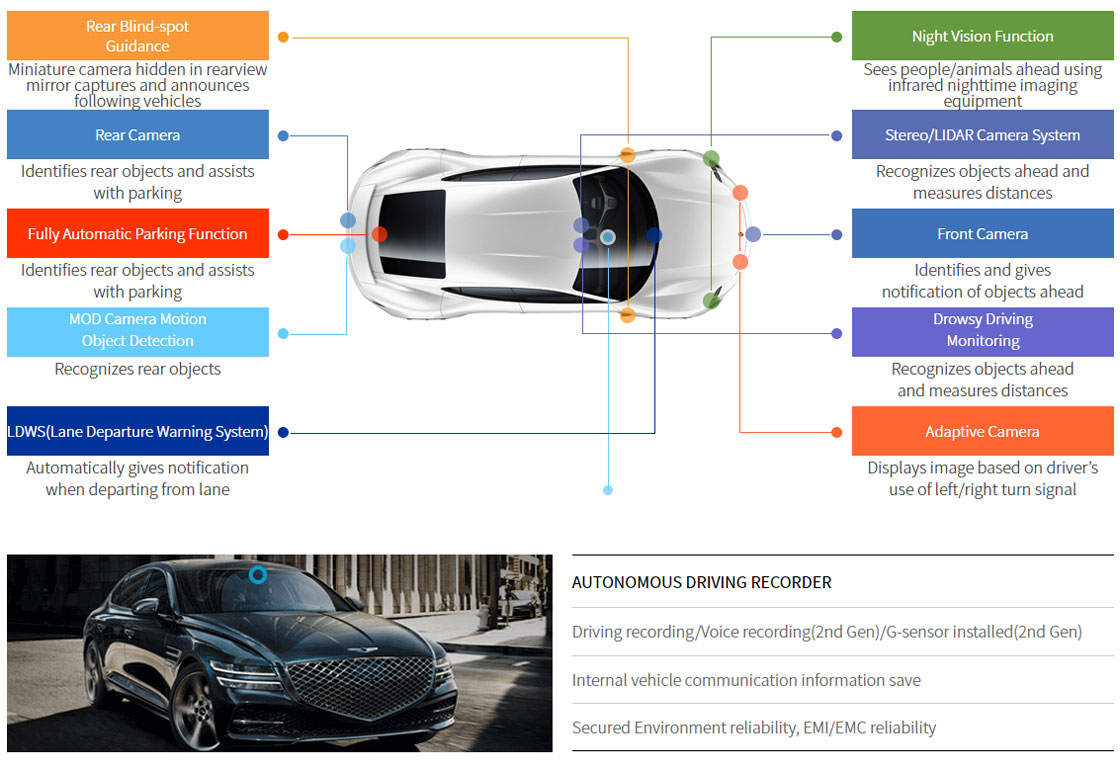

Automotive detection cameras are advanced imaging devices integrated into vehicles to monitor and interpret their surroundings. Unlike traditional cameras, these sensors are equipped with sophisticated technologies that enable high-resolution image capture, depth sensing, object recognition, and effective operation in a variety of environmental conditions. They serve as the “eyes” of modern vehicles, providing critical data that supports a wide range of functions, from basic driver assistance to complex autonomous driving tasks.

Evolution of self-driving cameras

The journey of automotive detection cameras began with simple rear-view cameras designed to assist with parking. Over the years, advances in sensor technology, image processing, and artificial intelligence have greatly expanded their capabilities. Today’s sensing cameras are an essential component of advanced driver assistance systems (ADAS) and autonomous vehicles, contributing to features such as lane departure warning, traffic sign recognition, pedestrian detection, and adaptive cruise control.

Essentially, automotive detection cameras are advanced optical sensors built into the vehicle that capture real-time images and video of the surrounding environment. They are integrated into systems that use software algorithms, machine learning, and artificial intelligence to interpret the data they collect, detecting other vehicles, pedestrians, obstacles, road signs, and lane markings, and monitoring driver behavior. The cameras work in conjunction with other sensors, such as radar, ultrasonic sensors, and LiDAR, to provide a comprehensive understanding of the car's surroundings.

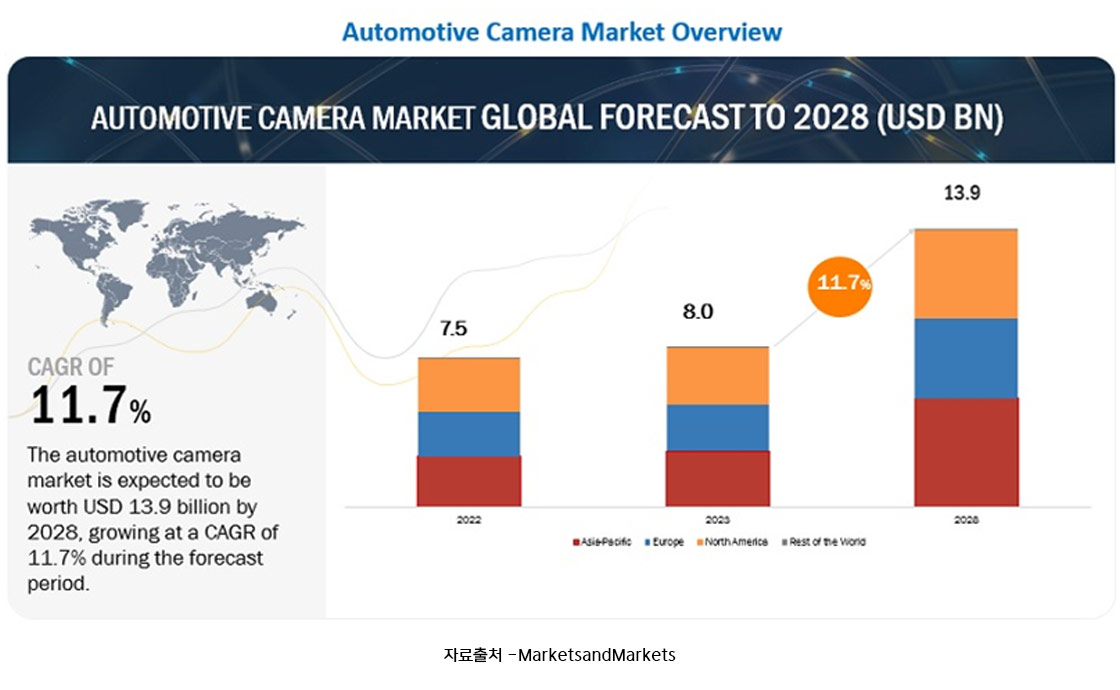

The automotive camera market is expected to grow by an average of 11.7% per year from $8 billion in 2023 to $13.9 billion in 2028. Camera systems and technologies are being applied in a variety of applications to control vehicles and improve the driving experience.
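The growth figures above can be cross-checked with a few lines of arithmetic. The sketch below assumes the 11.7% figure is a compound annual growth rate over the five years from 2023 to 2028; the function names are illustrative and not taken from any market report.

```python
def compound_growth(start_value: float, annual_rate: float, years: int) -> float:
    """Value after compounding `annual_rate` once per year for `years` years."""
    return start_value * (1.0 + annual_rate) ** years


def implied_annual_rate(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start value and an end value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted in the article: $8 billion (2023), $13.9 billion (2028), 11.7% per year.
YEARS = 2028 - 2023
projected_2028 = compound_growth(8.0, 0.117, YEARS)
rate = implied_annual_rate(8.0, 13.9, YEARS)

print(f"Projected 2028 market size: ${projected_2028:.1f} billion")
print(f"Implied annual growth rate: {rate:.1%}")
```

Under that assumption, the start value, end value, and growth rate quoted above agree with one another to within rounding.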

MCNEX-related technology

Types of sensing cameras in automotive applications

In automotive applications, several types of detection cameras are used to enhance vehicle safety, support driver assistance systems, and enable autonomous driving. Each type of camera has specific features and advantages, making it suitable for different aspects of vehicle operation.

1. Mono Camera: A mono camera is a single-lens camera that provides detailed images of the vehicle's surroundings. It is commonly used for tasks such as lane detection, traffic sign recognition, and pedestrian detection. Mono cameras are widely used in modern vehicles because of their simplicity, and they provide a cost-effective solution for basic driver assistance functions.

2. Stereo Camera: A stereo camera uses two lenses to capture depth information, similar to human binocular vision. This allows the vehicle to estimate the distance to objects, making it very useful for applications such as adaptive cruise control, collision avoidance, and 3D mapping. Stereo cameras provide a more detailed understanding of the environment than mono cameras.

3. Infrared cameras: Infrared (IR) cameras detect heat signatures, making them especially useful in low-light or night driving conditions. These cameras are essential for pedestrian detection because they can identify people and animals in the dark that cannot be seen with the naked eye. IR cameras are also used in driver monitoring systems to detect drowsiness or distraction.

4. 360-degree surround view camera: This system combines images from multiple cameras placed around the vehicle to provide a bird's-eye view of the vehicle's surroundings. This technology is useful for parking assistance, maneuvering in tight spaces, and improving overall situational awareness.

5. LiDAR (Light Detection and Ranging) cameras: LiDAR systems use laser pulses to create high-resolution 3D maps of the vehicle's surroundings. Although they are not traditional cameras, some LiDAR systems incorporate imaging capabilities.

6. Time-of-Flight (ToF) cameras: ToF cameras measure the time it takes for light to reflect off an object and return to the sensor, creating a 3D map of the environment that includes depth information.
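Two of the depth-sensing principles in the list above reduce to short formulas. The sketch below uses the standard pinhole relation for a rectified stereo pair (depth = focal length × baseline / disparity) and the round-trip relation for time-of-flight (distance = speed of light × time / 2). The function names and sample values are illustrative assumptions, not specifications of any MCNEX product.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance from a time-of-flight reading; the light travels out and back."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# Illustrative values only (not from a real camera datasheet).
depth = stereo_depth_m(focal_length_px=800.0, baseline_m=0.25, disparity_px=10.0)
distance = tof_distance_m(round_trip_time_s=100e-9)
print(f"Stereo depth: {depth:.1f} m")
print(f"ToF distance: {distance:.2f} m")
```

Because stereo depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant objects than for nearby ones, which is one reason cameras are paired with other ranging sensors at long range.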

These different types of sensing cameras play an important role in modern cars, each contributing uniquely to the path towards safety, convenience and full vehicle autonomy. As technology advances, these systems will become more sophisticated and the automotive industry will move closer to fully autonomous driving, where vehicles can operate safely without human intervention.

The role of sensing cameras in autonomous driving

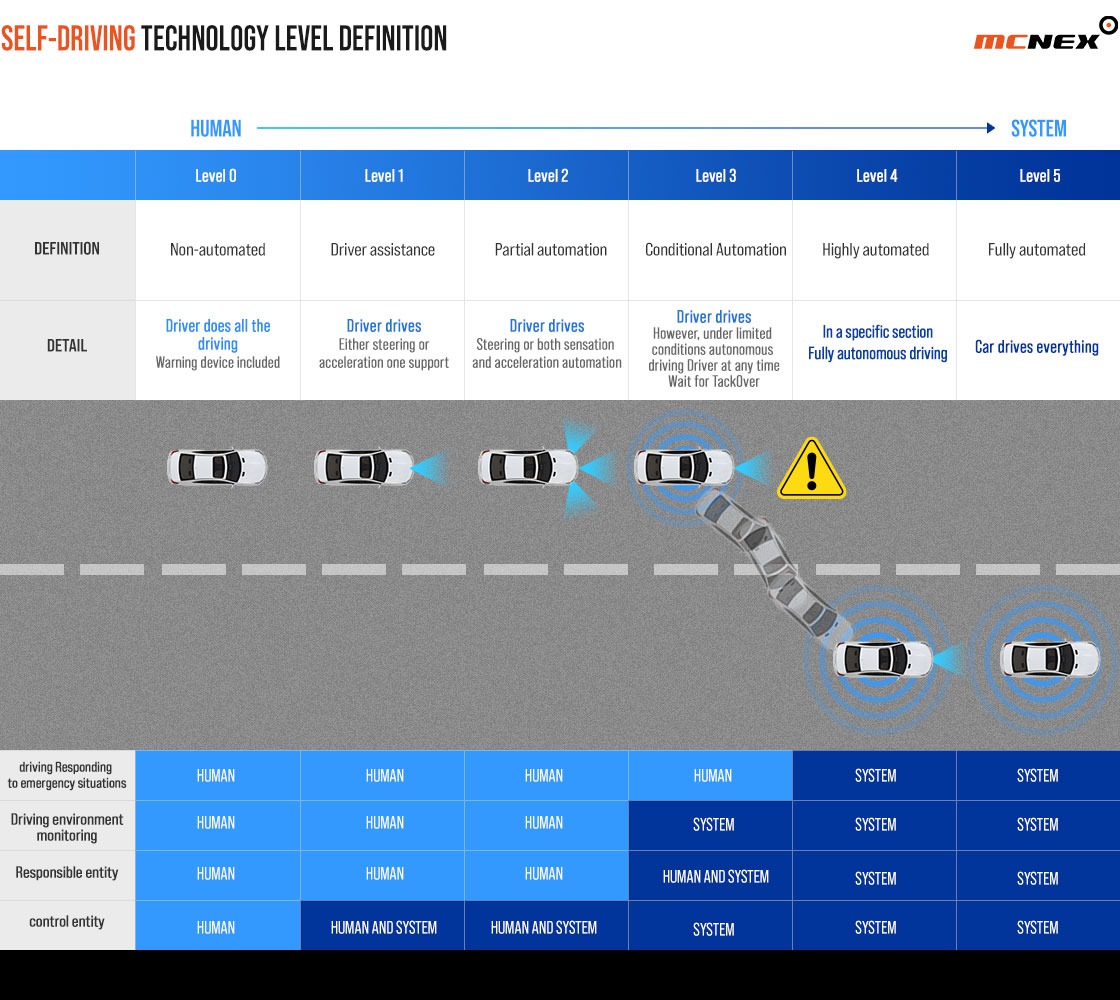

Sensing cameras are becoming more important as the automotive industry transitions to fully autonomous vehicles. They act as the eyes of autonomous systems, allowing them to interpret and navigate the world, and their role will shape the future of mobility. Here is how they contribute at each level of autonomous driving:

Level 1-2: Driver assistance systems

At Levels 1 and 2, the vehicle provides driver assistance but still requires human control. Detection cameras enable features such as lane-keep assist, automatic emergency braking, and adaptive cruise control. These systems improve safety but rely on driver participation.

1. A mono camera detects lane markings and warns the driver if the vehicle drifts or crosses lanes unintentionally.

2. Main functions: object detection, forward collision warning, traffic sign recognition.
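The lane departure warning described above can be sketched as a simple threshold check. This is a minimal illustration, assuming a hypothetical lane-detection stage that already reports the distance from the vehicle centerline to each lane marking; `LaneObservation`, the default vehicle width, and the margin are all assumptions, not part of any real ADAS interface.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LaneObservation:
    """Hypothetical per-frame output of a camera lane-detection stage."""
    left_marking_m: float   # distance from vehicle centerline to the left marking
    right_marking_m: float  # distance from vehicle centerline to the right marking


def lane_departure_warning(
    obs: LaneObservation,
    vehicle_width_m: float = 1.8,
    margin_m: float = 0.2,
    turn_signal_on: bool = False,
) -> Optional[str]:
    """Return "left" or "right" when the vehicle edge nears a marking, else None."""
    if turn_signal_on:
        # An indicated lane change is treated as intentional, so no warning.
        return None
    half_width = vehicle_width_m / 2.0
    if obs.left_marking_m - half_width < margin_m:
        return "left"
    if obs.right_marking_m - half_width < margin_m:
        return "right"
    return None


# Centered in a 3.6 m lane, then drifting toward the left marking.
print(lane_departure_warning(LaneObservation(1.8, 1.8)))
print(lane_departure_warning(LaneObservation(1.0, 2.6)))
```

Production systems typically also consider lateral velocity and time-to-line-crossing; the fixed margin here only keeps the example short.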

Level 3-4: Conditional to highly automated

At these levels, the vehicle can take on more driving responsibilities, such as driving on the highway or handling traffic jams. Cameras work together with other sensors, such as radar and LiDAR, to map the environment and make complex driving decisions.

1. A stereo camera system estimates the distance to the vehicle ahead and automatically adjusts speed according to traffic conditions.

2. Main functions: autonomous lane change, pedestrian detection, high-speed emergency braking.
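The speed adjustment in the stereo camera example above can be illustrated with a constant time-gap rule, a common textbook policy for adaptive cruise control. The sketch assumes the distance to the lead vehicle comes from the camera system; the function name, the 2-second gap, and the 5 m standstill distance are illustrative assumptions rather than values from the article.

```python
from typing import Optional


def acc_target_speed_mps(
    set_speed_mps: float,
    lead_distance_m: Optional[float],
    time_gap_s: float = 2.0,
    standstill_gap_m: float = 5.0,
) -> float:
    """Target speed that keeps at least `time_gap_s` behind the vehicle ahead.

    The desired following distance is standstill_gap_m + time_gap_s * speed,
    so the highest speed allowed by the measured distance is
    (lead_distance_m - standstill_gap_m) / time_gap_s.
    """
    if lead_distance_m is None:
        # No vehicle detected ahead: hold the driver's set speed.
        return set_speed_mps
    gap_limited_speed = max(0.0, (lead_distance_m - standstill_gap_m) / time_gap_s)
    return min(set_speed_mps, gap_limited_speed)


# Driver has set 30 m/s (108 km/h).
print(acc_target_speed_mps(30.0, None))   # open road
print(acc_target_speed_mps(30.0, 45.0))   # lead vehicle 45 m ahead
print(acc_target_speed_mps(30.0, 3.0))    # lead vehicle closer than the standstill gap
```

A real controller would command acceleration smoothly and account for the lead vehicle's speed; this rule only shows how a measured distance translates into a speed limit.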

Level 5: Fully automated

In Level 5 autonomy, the vehicle drives completely autonomously without human intervention. Detection cameras are part of a sophisticated sensor array that allows vehicles to navigate any environment, detect hazards and respond appropriately.

1. Using stereo and infrared cameras, cars can identify pedestrians, cyclists, and vehicles in urban environments, allowing them to navigate intersections, stop at crosswalks, and adapt to unpredictable situations.

2. Main functions: Real-time decision-making, advanced path planning, and obstacle avoidance with 360-degree awareness.

Integration with Other Sensor Technologies

Automotive detection cameras provide extensive visual data, but combining them with other sensor technologies improves overall system reliability and performance.

Sensor Fusion

Sensor fusion involves integrating data from multiple sensor types to gain a comprehensive and accurate understanding of the vehicle environment. Commonly fused sensors include:

1. LiDAR (Light Detection and Ranging): Uses laser pulses to measure distances with extreme precision and create detailed 3D maps.

2. Radar (Radio Detection and Ranging): Effective for detecting objects and measuring speed, especially useful in adverse weather conditions.

3. Ultrasonic Sensors: Short-range sensors ideal for parking assistance and obstacle detection at low speeds.

Advantages of Sensor Fusion

1. Redundancy and Reliability: Multiple sensors corroborate data, reducing the likelihood of errors and system failures.

2. Enhanced Perception: Combines the benefits of multiple sensors, such as the detailed images of a camera and the all-weather capabilities of radar.

3. Enhanced Decision Making: Provides autonomous systems with rich, multifaceted data for safe, informed navigation.
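One simple way to see why fusion improves reliability is inverse-variance weighting, where each sensor's distance estimate is weighted by how certain it is. The sketch below is a generic statistical illustration, assuming independent measurement errors; it is not the fusion method of any particular vehicle or supplier, and the sample variances are made up.

```python
from typing import List, Tuple


def fuse_estimates(measurements: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance. The fused variance is never
    larger than the smallest input variance.
    """
    if not measurements:
        raise ValueError("at least one measurement is required")
    if any(variance <= 0 for _, variance in measurements):
        raise ValueError("variances must be positive")
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    return fused_value, 1.0 / total_weight


# Illustrative distance-to-object readings in meters with assumed variances.
camera = (50.0, 4.0)  # camera estimate, less certain at range
radar = (52.0, 1.0)   # radar estimate, more certain at range
fused_distance, fused_variance = fuse_estimates([camera, radar])
print(f"Fused distance: {fused_distance:.1f} m, variance: {fused_variance:.2f}")
```

The fused estimate leans toward the more certain sensor while still using the other, and its variance is lower than either input, which reflects the redundancy and enhanced perception points above.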

Waymo's self-driving cars use a broad suite of sensors, including cameras, LiDAR, and radar, to provide powerful perception and navigation capabilities in a variety of driving conditions.