
The future of autonomous driving! Sensor Fusion System

Date: 2024-03-15

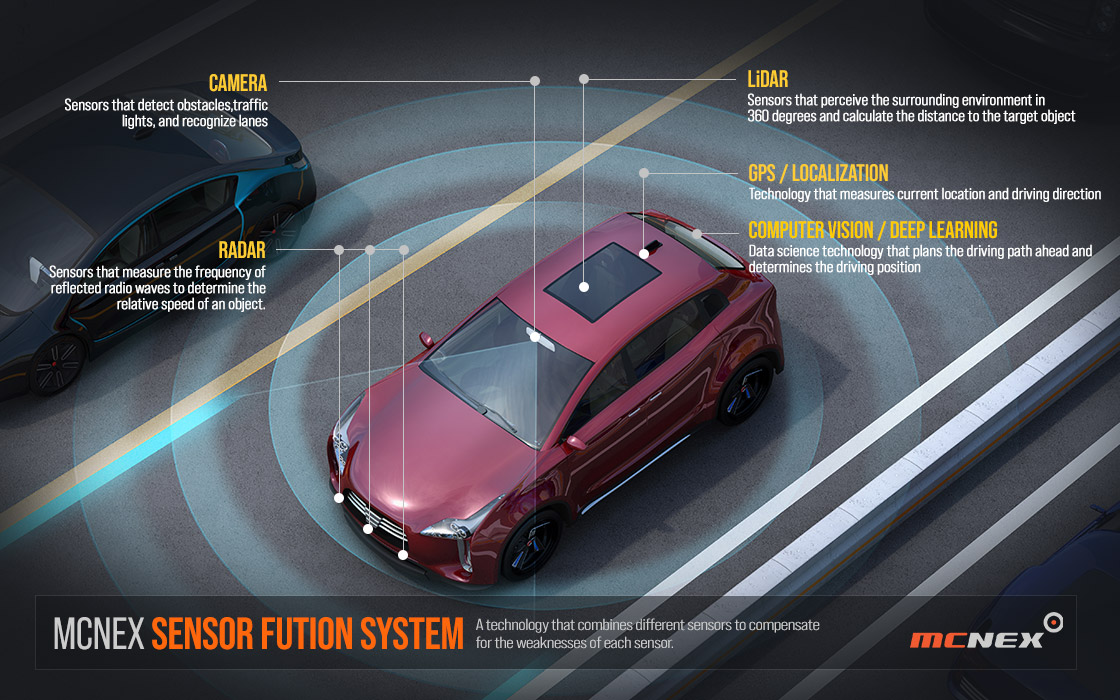

MCNEX Sensor Fusion System that illuminates the future of autonomous driving

As autonomous vehicles move closer to everyday reality, sensor fusion technology stands at the heart of this advancement. To drive safely, a vehicle must recognize and understand its environment, and sensor fusion is the core technology that makes this possible. Let's look at this technology, often described as the 'brain' of an autonomous vehicle, which interprets complex road environments and responds appropriately.

What is sensor fusion?

An ADAS (advanced driver assistance system) is divided into three domains: 'Sense,' 'Think,' and 'Act.' 'Sense' covers sensors such as cameras, radar, ultrasonic, and LiDAR; 'Think' refers to the processors that handle the information received from the sensors and the algorithms that integrate it; 'Act' means controlling the vehicle or displaying the collected and processed information.

Sensor fusion means combining the sensors mentioned above (cameras, radar, LiDAR, and ultrasonic) or integrating them with other types of sensors. Each sensor has its own role, strengths, and weaknesses, so combining them lets the system draw on the advantages of each. This matters especially in autonomous driving, where safety is paramount and reliable detection is needed even in difficult conditions such as nighttime, rain, snow, or strong backlight.

1. Data Acquisition

▶ Each sensor collects information about the surrounding environment.

▶ Cameras provide visual images, capturing objects and scenes within the wavelength range of light.

▶ LiDAR measures distance and shape as 3D point clouds by emitting laser beams into its surroundings and detecting the reflected light.

▶ Radar uses radio waves to detect the distance and speed of objects.

▶ Ultrasonic sensors emit sound waves and detect signals reflected back to identify nearby objects.

▶ GPS provides location information, and INS (Inertial Navigation System) tracks the direction and speed of the vehicle.
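
The reflection-based ranging described above for LiDAR and ultrasonic sensors can be illustrated with a time-of-flight calculation: the distance is half the round-trip time multiplied by the wave speed. The following is a minimal sketch rather than any vendor's implementation; the function name, the sample timings, and the 343 m/s speed of sound (dry air at about 20 °C) are assumptions made for illustration.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0   # laser pulses (LiDAR)
SPEED_OF_SOUND_M_S = 343.0           # assumed: dry air at about 20 °C


def time_of_flight_distance(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Distance to a reflector from the round-trip time of an emitted pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels out and back, so only half the path is the distance.
    return wave_speed_m_s * round_trip_s / 2.0


# Hypothetical timings: a 200 ns laser echo and a 10 ms ultrasonic echo.
lidar_range_m = time_of_flight_distance(200e-9, SPEED_OF_LIGHT_M_S)
ultrasonic_range_m = time_of_flight_distance(0.01, SPEED_OF_SOUND_M_S)
```

With these sample timings the laser echo corresponds to roughly 30 m and the ultrasonic echo to under 2 m, which matches the long-range and near-field roles the two sensors typically play.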

2. Data Processing and Integration

▶ The collected raw data undergo preprocessing such as noise reduction, filtering, and scaling.

▶ A sensor fusion algorithm then combines the data, using the strengths of each sensor to compensate for the weaknesses of the others and producing a single, more accurate estimate.

▶ The fusion algorithm can operate at various levels from low-level fusion to high-level fusion. Low-level fusion directly combines raw data, while high-level fusion combines features or decisions that have already been extracted from the data of each sensor.

▶ This process can use various mathematical models and algorithms, such as Kalman filters, Bayesian networks, and artificial neural networks.
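
As one illustration of the algorithms listed above, a one-dimensional Kalman filter can fuse range measurements from two sensors into a single estimate. This is a simplified sketch under stated assumptions, not MCNEX's algorithm: the class name `Kalman1D`, the constant-position model, the noise variances, and the sample measurements are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    x: float  # state estimate (range to the object, in metres)
    p: float  # variance of the estimate
    q: float  # process noise variance added at each step

    def predict(self) -> None:
        # Constant-position model: the estimate is kept, its uncertainty grows.
        self.p += self.q

    def update(self, z: float, r: float) -> None:
        # Blend a measurement z (with noise variance r) into the estimate.
        k = self.p / (self.p + r)  # Kalman gain: how much to trust z
        self.x += k * (z - self.x)
        self.p *= 1.0 - k


RADAR_VAR, LIDAR_VAR = 0.25, 0.01  # hypothetical noise variances (m^2)

kf = Kalman1D(x=0.0, p=1e6, q=0.01)  # large p: no prior knowledge of range
for radar_z, lidar_z in [(20.4, 20.05), (19.7, 19.98), (20.2, 20.01)]:
    kf.predict()
    kf.update(radar_z, RADAR_VAR)  # each sensor is folded in with its own noise
    kf.update(lidar_z, LIDAR_VAR)
```

After three steps the estimate `kf.x` settles close to 20 m, and its variance `kf.p` is smaller than that of either sensor alone, which is the practical benefit of fusing them.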

3. Decision and Action

▶ The integrated data is transmitted to the vehicle's decision-making system.

▶ Based on the fused data, this system determines the actions the vehicle should take, such as changing lanes, adjusting speed, or emergency braking.

▶ Finally, the system executes the actual control commands for the vehicle's steering, acceleration, and braking.
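
The decision step above can be sketched as a simple rule based on time-to-collision (TTC), the fused range divided by the closing speed. Real decision-making systems are far more elaborate; the function name, the action labels, and the 1.5 s and 3.0 s thresholds below are hypothetical values chosen only to make the idea concrete.

```python
def choose_action(range_m: float, closing_speed_m_s: float,
                  brake_ttc_s: float = 1.5, slow_ttc_s: float = 3.0) -> str:
    """Pick a longitudinal action from the fused range and closing speed."""
    if closing_speed_m_s <= 0:
        return "maintain"  # the gap is steady or opening
    ttc_s = range_m / closing_speed_m_s  # seconds until contact at current rate
    if ttc_s < brake_ttc_s:
        return "emergency_brake"
    if ttc_s < slow_ttc_s:
        return "reduce_speed"
    return "maintain"
```

For example, an object 10 m ahead closing at 10 m/s gives a TTC of 1 s and triggers emergency braking, while the same closing speed at 50 m leaves the vehicle's speed unchanged.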

The sensor fusion process enables autonomous vehicles to react more precisely to the various situations and scenarios they encounter on the road. This technology helps vehicles drive safely and efficiently in complex road environments and serves as an important foundation for the development of future autonomous driving technologies.

Core Advantages of MCNEX Sensor Fusion Technology

Sensor fusion for autonomous vehicles is a technology that combines various sensor data to enable a comprehensive and accurate perception of the surrounding environment. Sensor fusion maximizes the strengths and compensates for the weaknesses of multiple sensor systems, helping vehicles make more precise decisions in real time.

Accurate Environmental Perception

Sensor fusion combines data from various sensors to enable a more complete perception of the environment. For example, cameras provide visual images, LiDAR measures distance and shape, and radar measures the distance and speed of objects. By integrating these data, vehicles can obtain a more accurate 360-degree view of their surroundings, which is essential for vehicle operation and safety.

Improved Reliability

A single sensor can produce errors under certain conditions or environments. Sensor fusion compensates for these weaknesses and corrects errors using data from other sensors. This enhances the overall reliability of the system and allows for more effective response to potential hazards.
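
One simple way to picture this error correction is a consistency check across redundant sensors: readings that disagree strongly with the median are set aside, and the rest are averaged. This is an illustrative sketch only; the function name `robust_range`, the 1 m tolerance, and the sample readings are assumptions.

```python
from statistics import median


def robust_range(readings_m: dict[str, float],
                 max_dev_m: float = 1.0) -> tuple[float, list[str]]:
    """Average the readings that agree with the median; report the rest."""
    if not readings_m:
        raise ValueError("at least one reading is required")
    centre = median(readings_m.values())
    trusted = {name: value for name, value in readings_m.items()
               if abs(value - centre) <= max_dev_m}
    if not trusted:
        raise ValueError("no readings agree within the tolerance")
    rejected = sorted(set(readings_m) - set(trusted))
    return sum(trusted.values()) / len(trusted), rejected


# Hypothetical case: the radar reports a spurious range.
estimate_m, rejected = robust_range(
    {"camera": 20.3, "lidar": 20.0, "radar": 35.0}
)
```

Here the radar reading is far from the other two, so it is rejected and the estimate is the mean of the camera and LiDAR readings. A production system would typically also track how often each sensor is rejected.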

Utilization of Complementary Information

Each sensor provides different types of data, which can sometimes be complementary. For example, LiDAR performance may degrade in fog or heavy rain, but radar can operate effectively in these conditions. Sensor fusion draws on the strengths of each sensor so that vehicles can perceive their surroundings effectively across a much wider range of conditions than any single sensor allows.
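
The fog example can be made concrete with an inverse-variance weighted mean, where each sensor's weight shrinks as its expected noise grows. The sketch below assumes the weather condition is already known and uses made-up noise variances; the table `NOISE_VAR` and the function `fuse` are hypothetical names, not part of any MCNEX product.

```python
# Hypothetical range-noise variances (m^2) per weather condition and sensor.
NOISE_VAR = {
    "clear": {"lidar": 0.01, "radar": 0.25},
    "fog":   {"lidar": 4.00, "radar": 0.25},  # LiDAR assumed to degrade in fog
}


def fuse(readings_m: dict[str, float], condition: str) -> float:
    """Inverse-variance weighted mean: noisier sensors get less weight."""
    variances = NOISE_VAR[condition]
    weights = {name: 1.0 / variances[name] for name in readings_m}
    total = sum(weights.values())
    return sum(weights[name] * readings_m[name] for name in readings_m) / total


readings = {"lidar": 18.0, "radar": 20.0}
clear_m = fuse(readings, "clear")  # dominated by the LiDAR reading
fog_m = fuse(readings, "fog")      # dominated by the radar reading
```

With the same two readings, the fused range sits near the LiDAR value in clear weather and near the radar value in fog, so the more trustworthy sensor dominates in each condition.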

Recognition in Adverse and Extreme Conditions

Sensor fusion helps vehicles recognize their environment in various weather and lighting conditions. For example, cameras may have limited performance at night or in low-light conditions, but infrared sensors or radar can function well in these situations.

Safety and Flexibility

The accurate and reliable information obtained through sensor fusion is essential for vehicle safety systems. It enables vehicles to respond quickly to changes in the environment, avoid potential collisions, and ensure the safety of drivers and passengers. Additionally, sensor fusion systems have the flexibility to combine information from various sensors and can easily integrate new sensor technologies as they become available.

Future Prospects - Integration and Advancement

With the advancement of sensor technologies, sensor fusion will possess even more sophisticated perception capabilities. For instance, the integration of AI and machine learning technologies will allow for faster and more accurate interpretation of sensor data, enabling vehicles to navigate more complex environments autonomously.

As the cost of advanced sensor technologies decreases, sensor fusion will become more accessible. This will accelerate the democratization of autonomous driving technology, help bring autonomous driving features to all vehicle grades, and significantly improve driving safety, especially at night or in poor weather, through better environmental perception.

In the future, advancements in sensor fusion technology will enable vehicles to drive safely in almost all road conditions. The development of artificial intelligence and machine learning will make sensor data processing and decision-making faster and more accurate, greatly enhancing the performance of autonomous vehicles. This will improve road safety and traffic flow and increase vehicle autonomy, moving autonomous driving technology toward safe operation without human intervention.