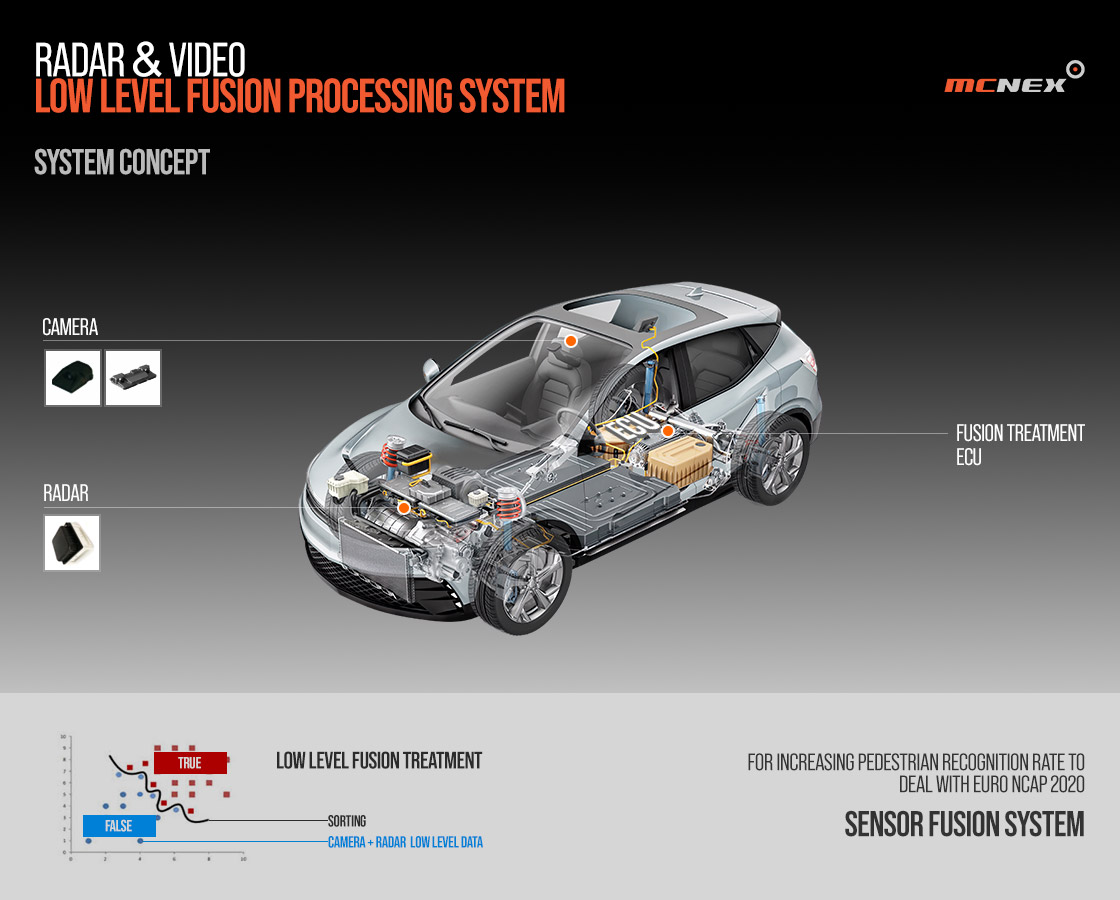

- Tech News: Radar & video LOW-LEVEL fusion processing system (DATE 2024-05-15)

Radar & video LOW-LEVEL fusion processing system

Low-level “sensor fusion system” of video and radar to improve pedestrian recognition rate

As autonomous vehicle technology and city safety monitoring systems develop, pedestrian recognition technology is becoming more important. The low-level fusion processing system of radar and video is attracting attention as a way to improve pedestrian recognition rates.

The low-level sensor fusion system of cameras and radar uses integrated data from both sensors to improve pedestrian recognition rates. The system combines the strengths of each sensor to help more accurately detect and identify pedestrians in the environment.

The role of camera and radar sensors

Camera sensor functions

High-resolution cameras capture visual images of the surroundings. This contributes to accurate recognition by capturing the shape, color, and movement of pedestrians in detail. However, camera sensors may have limitations at night or in extreme weather conditions.

Importance of Radar Sensors

Radar sensors use electromagnetic waves to detect the location and speed of objects. These sensors perform particularly well at night or in adverse weather conditions, providing information that video sensors might otherwise miss. Radar is advantageous for accurately measuring dynamic information such as pedestrian speed and direction.

The low-level “sensor fusion system” compensates for the shortcomings of each sensor by integrating and processing data from the two sensors in real time. Data fusion is achieved through the following process.

Radar & video LOW-LEVEL fusion processing system principle

The radar & video LOW-LEVEL fusion processing system is a technology that integrates and processes data obtained from cameras and radar sensors at an early stage of the processing pipeline. Through this integration, the strengths of each sensor are maximized and the weaknesses are compensated for, enabling more accurate pedestrian recognition.
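One common way to combine the two sensors at an early stage is to project radar detections into the camera image, so that each radar point lands on the pixels it corresponds to. The sketch below illustrates that idea with a pinhole camera model. It is an assumption for illustration, not MCNEX's method: the function names, the intrinsic parameters (`fx`, `fy`, `cx`, `cy`), and the premise that radar points are already expressed in the camera coordinate frame (x right, y down, z forward, in meters) are all hypothetical.

```python
def project_to_pixel(point_xyz, fx, fy, cx, cy):
    """Project a 3-D point in the camera frame onto the image plane.

    Assumes a pinhole model without lens distortion. Returns None for
    points at or behind the camera (z <= 0).
    """
    x, y, z = point_xyz
    if z <= 0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    return (u, v)


def attach_radar_to_image(radar_points, width, height, fx, fy, cx, cy):
    """Keep radar points that fall inside the image and tag them with a pixel.

    radar_points is a list of dicts with hypothetical keys "xyz" (meters)
    and "speed" (m/s). The output pairs each visible point with its pixel
    location, so later stages can read image and radar data together.
    """
    hits = []
    for point in radar_points:
        uv = project_to_pixel(point["xyz"], fx, fy, cx, cy)
        if uv is None:
            continue
        u, v = uv
        if 0 <= u < width and 0 <= v < height:
            hits.append({
                "pixel": (round(u), round(v)),
                "depth": point["xyz"][2],
                "speed": point["speed"],
            })
    return hits
```

In a real system, the radar-to-camera transform and the camera intrinsics would come from calibration, which this sketch leaves out.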

1. Data Preprocessing

Data collected from each sensor is processed to be suitable for the fusion process through noise removal, normalization, and synchronization. Preprocessing of data is a critical step that determines the efficiency and accuracy of subsequent data fusion.
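The three preprocessing operations named above can be sketched as follows. This is a minimal illustration under stated assumptions, not the system's actual pipeline: a moving average stands in for noise removal, min-max scaling for normalization, and nearest-timestamp matching for synchronization. All function names and the `max_gap` tolerance are hypothetical.

```python
from bisect import bisect_left


def moving_average(values, window=3):
    """Smooth a 1-D signal with a centered moving average (simple noise removal)."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


def min_max_normalize(values):
    """Scale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def synchronize(camera_ts, radar_ts, max_gap=0.05):
    """Pair each camera frame with the nearest radar frame in time.

    Both inputs are timestamps in seconds; radar_ts must be sorted.
    Returns (camera_index, radar_index) pairs whose time difference
    is at most max_gap. Camera frames without a close radar frame
    are dropped.
    """
    pairs = []
    for i, t in enumerate(camera_ts):
        j = bisect_left(radar_ts, t)
        candidates = [k for k in (j - 1, j) if 0 <= k < len(radar_ts)]
        if not candidates:
            continue
        best = min(candidates, key=lambda k: abs(radar_ts[k] - t))
        if abs(radar_ts[best] - t) <= max_gap:
            pairs.append((i, best))
    return pairs
```

Synchronization matters because the two sensors usually sample at different rates; pairing frames that are too far apart in time would fuse measurements of a pedestrian at two different positions.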

2. Data Fusion Algorithm

Fusion algorithms combine camera and radar data to produce more accurate information. This may include weight assignment, integrated inference, and optimization techniques, and is important for identifying the exact location, speed, and other dynamic characteristics of pedestrians.
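As one possible reading of "weight assignment", the sketch below fuses a camera estimate and a radar estimate of the same quantity (for example, a pedestrian's distance) by inverse-variance weighting, so that the less noisy sensor counts for more. The article does not specify the algorithm; the function name and the variance inputs are assumptions made for illustration.

```python
def fuse_inverse_variance(cam_value, cam_var, radar_value, radar_var):
    """Combine two noisy estimates of the same quantity.

    Each estimate is weighted by the inverse of its variance, so a
    sensor with lower variance (more reliable) gets a larger weight.
    Returns the fused value and its variance, which is never larger
    than the smaller of the two input variances.
    """
    if cam_var <= 0 or radar_var <= 0:
        raise ValueError("variances must be positive")
    w_cam = 1.0 / cam_var
    w_radar = 1.0 / radar_var
    fused = (w_cam * cam_value + w_radar * radar_value) / (w_cam + w_radar)
    fused_var = 1.0 / (w_cam + w_radar)
    return fused, fused_var


# Example: the camera estimates 10.0 m (variance 4.0), the radar 12.0 m
# (variance 1.0). The fused distance sits closer to the radar reading.
distance, variance = fuse_inverse_variance(10.0, 4.0, 12.0, 1.0)
```

The weights could also be adjusted by conditions such as lighting or weather, giving radar more influence at night, which matches the complementary roles of the two sensors described earlier.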

3. Pedestrian Detection and Tracking

Pedestrians are detected and tracked in real time based on the integrated data. The machine learning and deep learning models used in this process continuously improve pedestrian recognition rates by learning from a variety of environmental data.
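The tracking half of this step can be illustrated with a very small filter. The sketch below uses an alpha-beta filter on a one-dimensional position (for example, fused distance to a pedestrian) to smooth measurements and estimate velocity over time. This is a stand-in chosen for brevity, not the method described in the article; the learned detector is out of scope here, and the function name, `dt`, `alpha`, and `beta` values are assumptions.

```python
def alpha_beta_track(measurements, dt=0.1, alpha=0.85, beta=0.005):
    """Track a 1-D position from a sequence of noisy measurements.

    At each step the filter predicts the next position from the current
    position and velocity, then corrects both using the residual between
    the new measurement and the prediction. alpha controls how strongly
    position follows the measurement, beta how strongly velocity does.
    Returns a list of (position, velocity) estimates, one per measurement.
    """
    if not measurements:
        return []
    position = float(measurements[0])
    velocity = 0.0
    estimates = [(position, velocity)]
    for z in measurements[1:]:
        predicted = position + velocity * dt
        residual = z - predicted
        position = predicted + alpha * residual
        velocity = velocity + (beta / dt) * residual
        estimates.append((position, velocity))
    return estimates
```

A production tracker would typically work in two or three dimensions and associate measurements with multiple pedestrians at once; this sketch only shows the predict-and-correct loop for a single target.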

Application areas and expected effects

Sensor fusion systems can be used in a variety of fields, including self-driving cars, city safety surveillance, and robotics technology. Improved pedestrian recognition rates through this technology are expected to reduce traffic accidents, improve pedestrian safety, and increase the overall efficiency of the system.

As technology advances, the accuracy and efficiency of low-level sensor fusion systems will continue to improve. Advances in artificial intelligence and machine learning allow these systems to operate effectively in more complex environments, and as costs gradually decrease and systems become more compact, this technology is expected to be adopted in more applications.
