- Tech News: Tri-Focal camera, a key sensor for autonomous driving. DATE 2024-11-05

Tri-Focal Camera Systems

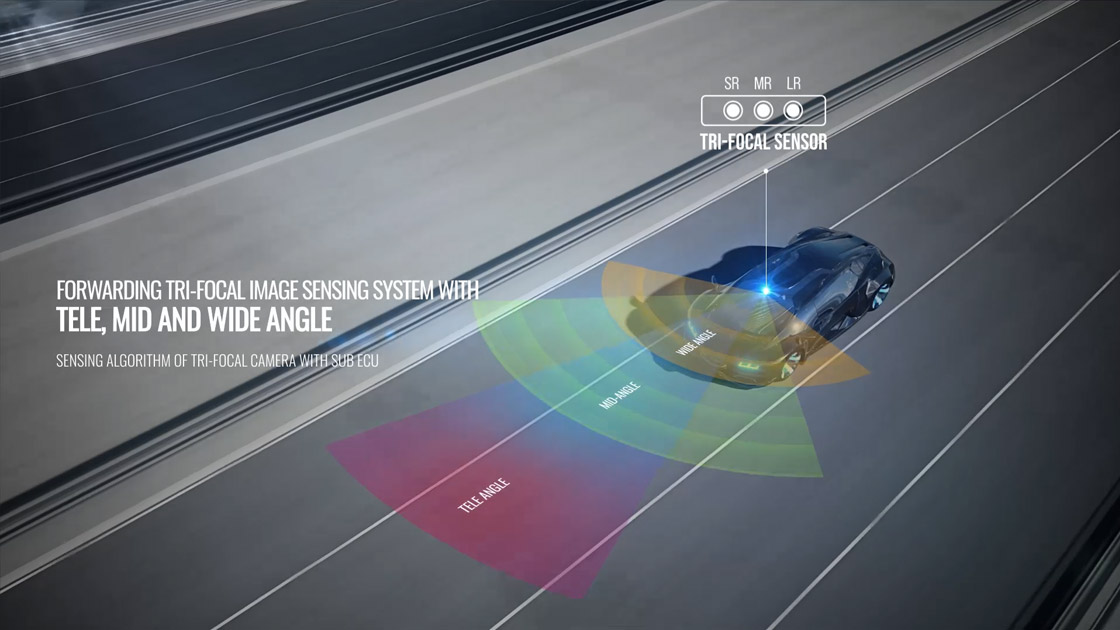

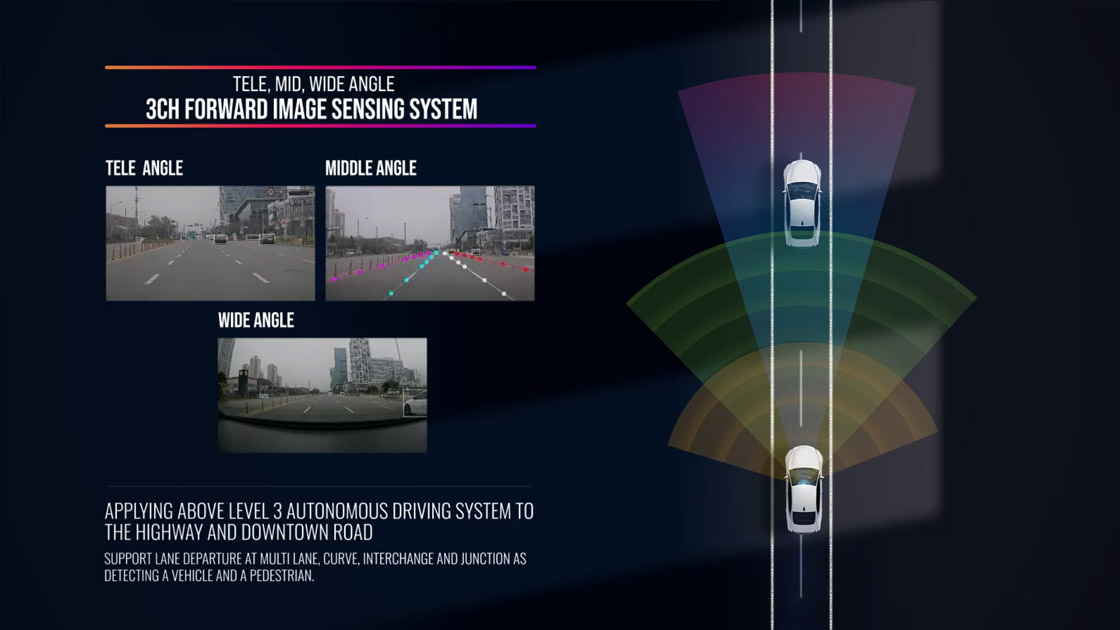

The drive toward fully autonomous vehicles depends on accurate, real-time perception of the environment. Sensing solutions such as LiDAR, radar, and multi-camera systems play an important role in detecting objects and obstacles, estimating depth, and executing complex driving maneuvers. The Tri-Focal camera system uses three cameras with short, medium, and long focal lengths. Together they pair a wide field of view (FOV) at close range with high-resolution imaging at long range, giving an autonomous vehicle better perception than a single camera can provide.

1. Wide-Angle Camera (Short Focal Length): Detects and measures nearby objects and obstacles, making it ideal for parking, low-speed driving, and recognizing close objects like pedestrians and bicycles.

2. Standard Camera (Medium Focal Length): Monitors the area in front of the vehicle, assisting with lane changes and lane keeping. Mid-range sensing is also crucial for assessing road conditions ahead and planning the vehicle's next maneuvers.

3. Telephoto Camera (Long Focal Length): Captures distant objects in detail, useful for monitoring the status of vehicles far ahead at high speeds, maintaining a safe distance, and detecting road hazards or traffic situations.
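The trade-off between the three cameras follows from basic lens geometry: for a given sensor, a shorter focal length gives a wider field of view, and a longer focal length gives a narrower one with more detail on distant objects. The sketch below illustrates this with the ideal pinhole-camera relation FOV = 2·atan(w / 2f). The sensor width and the three focal lengths are hypothetical values chosen for illustration; they are not MCNEX specifications, and real wide-angle lenses also deviate from the pinhole model because of distortion.

```python
import math


def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


# Hypothetical values for illustration only (not from the article).
SENSOR_WIDTH_MM = 6.0
FOCAL_LENGTHS_MM = {"wide": 2.0, "standard": 6.0, "telephoto": 12.0}

fovs = {
    name: horizontal_fov_deg(SENSOR_WIDTH_MM, focal_mm)
    for name, focal_mm in FOCAL_LENGTHS_MM.items()
}

for name, fov in fovs.items():
    print(f"{name}: {fov:.1f} deg horizontal FOV")
```

With these assumed numbers, the wide camera covers the broadest angle and the telephoto camera the narrowest, which matches the division of roles described above.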

Key features of the Tri-Focal camera system

1. Depth Perception with Triangulation: Uses triangulation to calculate object distances by comparing images from cameras with different focal lengths, giving depth information for short, medium, and long ranges.

2. Complex Road Situation Analysis: Supports functions like obstacle avoidance, lane changes, and highway merging by using multiple focal planes.

3. High-Resolution Object Detection: Enables advanced object detection and classification, distinguishing between pedestrians, vehicles, cyclists, traffic signs, and other objects.

4. Detailed Road Information: Captures road details, including lane markings and road boundaries, with wide-angle lenses for close views and telephoto lenses for distant details.
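The article does not give the triangulation formula, so the following is only a minimal sketch of the textbook relation for a rectified camera pair, Z = f·B/d, where f is the focal length in pixels, B is the baseline between the two cameras, and d is the disparity in pixels. It assumes the two images have already been rectified and rescaled to a common focal length, which a pair with different focal lengths would need first. All numbers (baseline, focal lengths, disparity error) are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for a rectified camera pair (pinhole model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m: float, focal_px: float, baseline_m: float,
                disparity_error_px: float = 0.25) -> float:
    """First-order depth uncertainty: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Hypothetical numbers, chosen only to illustrate the relation.
BASELINE_M = 0.12
depth_m = depth_from_disparity(focal_px=1200.0, baseline_m=BASELINE_M, disparity_px=4.0)
print(f"estimated depth: {depth_m:.1f} m")

# At the same 100 m range, a longer focal length gives a smaller depth error.
for focal_px in (1200.0, 4800.0):
    err = depth_error(100.0, focal_px, BASELINE_M)
    print(f"f = {focal_px:.0f} px -> depth error ~ {err:.1f} m")
```

Because the depth error grows with the square of the distance and shrinks with focal length, this simple model suggests why a long-focal-length camera helps when ranging distant objects.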

Future trends and developments in Tri-Focal camera technology

1. Integration with AI and Machine Learning

Future Tri-Focal camera systems will likely integrate more advanced AI and machine learning algorithms, enhancing object recognition and decision-making capabilities in real time. This will improve the vehicle’s ability to identify complex objects and predict their movements.

2. Improved Depth Perception and Resolution

Advances in sensor technology will increase the resolution and depth accuracy of Tri-Focal cameras, allowing for more precise detection of distant objects and small details on the road, even under challenging conditions like low light or bad weather.

3. Fusion with Other Sensors

Tri-Focal camera systems may be combined with other sensors, such as LiDAR and radar, for a more comprehensive view of the surroundings. This sensor fusion will create a more reliable perception system, compensating for the limitations of each individual sensor.
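The article does not say how such fusion would be carried out. As one simple illustration of how combining sensors can compensate for individual weaknesses, the sketch below uses inverse-variance weighting, a common textbook technique, to merge independent range estimates of the same object. The function name, the readings, and the variances are hypothetical, and production systems typically use more elaborate methods such as Kalman filtering.

```python
def fuse_estimates(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    Returns the fused value and its variance. Less noisy sensors
    (smaller variance) receive proportionally more weight.
    """
    if not estimates:
        raise ValueError("at least one estimate is required")
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    return fused_value, 1.0 / total_weight


# Hypothetical range readings for one object, in metres, with variances in m^2.
camera_range = (50.0, 4.0)  # camera depth: assumed noisier at this distance
radar_range = (52.0, 1.0)   # radar range: assumed more precise

fused_range, fused_variance = fuse_estimates([camera_range, radar_range])
print(f"fused range: {fused_range:.2f} m, variance: {fused_variance:.2f} m^2")
```

The fused variance is smaller than that of either input, which is the sense in which fusion yields a more reliable estimate than any single sensor under these assumptions.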

4. Miniaturization and Cost Reduction

As technology evolves, Tri-Focal camera systems are expected to become smaller, more energy-efficient, and less expensive, making them easier to integrate into a wider range of vehicles, including lower-cost models.

5. Enhanced Autonomous Driving Functions

With better object detection and depth perception, future Tri-Focal cameras will support more advanced autonomous driving features, such as precise lane keeping, automated highway merging, and safe navigation through complex urban environments.

These trends will likely make Tri-Focal camera systems a more integral part of autonomous driving technology in the years to come.